Full Autonomy Has Arrived? Community Reactions to GPT-5.3 Codex Launch

The developer community hails GPT-5.3 Codex as the dawn of 'full autonomy.' With large benchmark gains over 5.2, a new steering feature, and a 'self-building' narrative, the excitement is real, though the lack of API access and cybersecurity concerns remain.

On February 5, 2026, OpenAI released GPT-5.3 Codex, calling it "the most capable agentic coding model to date." The new model combines GPT-5.2 Codex's coding performance with GPT-5.2's reasoning and professional knowledge capabilities, and it runs 25% faster. OpenAI also describes it as the first model that helped debug its own training. So how did the developer community react?

1. "Full Autonomy Has Arrived"

AI agent developer Matt Shumer published a review titled "Full Autonomy Has Arrived" on his blog. He called GPT-5.3 Codex "the first coding model I can start, walk away from, and come back to working software." He reported that runs lasting over 8 hours maintained context and completed tasks without degradation.

The biggest upgrade Shumer identified over 5.2 was 'judgment under ambiguity.' When prompts are missing details, the model's assumptions are "shockingly similar to what I would personally decide." Previous versions tended to choose "the fastest plausible path to success" unless constraints were extremely explicit. With 5.3, Shumer says, the model makes the right calls even without those guardrails.

2. Benchmarks and New Features vs GPT-5.2

| Benchmark | GPT-5.3 Codex | GPT-5.2 Codex | Change |
|---|---|---|---|
| SWE-Bench Pro | 56.8% | 56.4% | +0.4 pp (slight increase) |
| Terminal-Bench 2.0 | 77.3% | 64.0% | +13.3 pp |
| OSWorld | 64.7% | 38.2% | +26.5 pp |
| Cybersecurity CTF | 77.6% | 67.4% | +10.2 pp |

The 77.3% on Terminal-Bench 2.0 is a 13.3-point jump in the terminal proficiency that coding agents depend on. The 64.7% on OSWorld approaches the human baseline of roughly 72% and is about 1.7 times the previous generation's 38.2%, a large gain in the model's ability to carry out productivity tasks by operating a computer screen. SWE-Bench Pro improved only marginally, but token consumption decreased, so the model reaches similar performance at lower cost.
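As a quick sanity check on the table, the sketch below recomputes each change both in percentage points and as a ratio of the new score to the old one. The scores are the reported figures from the table; the `compare` helper and `SCORES` dictionary are hypothetical names used only for illustration. It shows that OSWorld's 26.5-point gain corresponds to roughly a 1.7x ratio, while Terminal-Bench 2.0's 13.3-point gain is about 1.2x.

```python
# Reported benchmark scores in percent: (GPT-5.3 Codex, GPT-5.2 Codex).
# Values copied from the comparison table; helper names are hypothetical.
SCORES = {
    "SWE-Bench Pro": (56.8, 56.4),
    "Terminal-Bench 2.0": (77.3, 64.0),
    "OSWorld": (64.7, 38.2),
    "Cybersecurity CTF": (77.6, 67.4),
}


def compare(new: float, old: float) -> tuple[float, float]:
    """Return (absolute change in percentage points, new/old ratio)."""
    # Round to tame floating-point noise such as 13.299999999999997.
    return round(new - old, 1), round(new / old, 2)


if __name__ == "__main__":
    for name, (new, old) in SCORES.items():
        delta_pp, ratio = compare(new, old)
        print(f"{name:<20} {delta_pp:+5.1f} pp  x{ratio:.2f}")
```

Keeping absolute and relative change separate avoids conflating "points gained" with "times better": the Cybersecurity CTF's 10.2-point gain, for example, is only about a 15% relative improvement.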

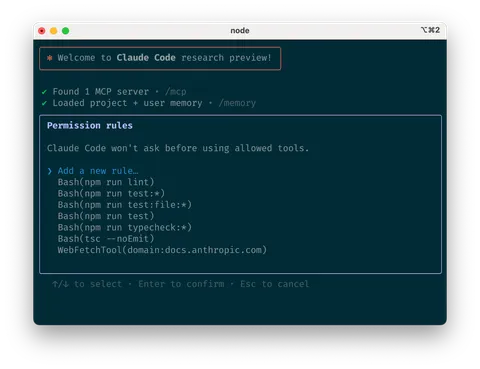

The new 'steering' feature is another key change. Instead of submitting a prompt and waiting for the final result, users can now intervene mid-task to ask questions and correct the model's direction. It's like delegating work to a colleague and checking in along the way.

The most talked-about aspect is the 'self-building AI' narrative. OpenAI revealed that the Codex team used early versions to debug the model's own training, manage deployment, and even dynamically scale GPU clusters on launch day.

3. Developer Community Cheers

On Reddit, one user wrote: "I've always hated Codex and only used 5.2 high and xhigh. But 5.3-codex-xhigh is amazing. I've built more in 4 hours than I have in the last week." The OpenAI Developer Community was flooded with reactions like "feels like being a kid, and getting GTA 4 for the Xbox 360" and "This February feels like Christmas!"

Discourse co-founder Sam Saffron highlighted the model's test-driven development (TDD) tendency: "It almost insists to write failing tests prior to building features. Great move by OpenAI." Shumer echoed this: "With clear validation targets, GPT-5.3-Codex will iterate for hours without losing the thread. Without tests, it is excellent. With tests, it becomes a different class of tool."

Code quality drew praise as well. Shumer observed that compared to 5.2, the output features "cleaner architecture, fewer hacky patches, fewer subtle bugs accumulating over time. It usually leaves the codebase in much better shape."

4. Speed Praised, But No API Access Yet

Speed improvements over 5.2 have been generally well received. OpenAI claims a 25% speed boost, and Discourse's Sam Saffron confirmed that "5.3 is definitely proving to be faster." Lower token consumption adds to the cost efficiency. Some users reported sluggishness immediately after launch (one OpenAI community member wrote "probably 3x slower than 5.2 codex"), but this most likely reflects temporary server load.

The biggest complaint is the lack of API access. GPT-5.3 Codex is available to paid ChatGPT users through the Codex app, CLI, IDE extensions, and web, but the API remains closed. Businesses therefore cannot integrate the model into their own products, and pricing remains unclear. The suspected reason for the delay is that GPT-5.3 Codex is the first model classified as 'High' for cybersecurity under OpenAI's Preparedness Framework. Fortune reported that "the coding breakthrough is forcing OpenAI to rethink how fast it can deploy its most powerful models."

Final Thoughts: "I Don't Know What to Do With Myself"

The most striking line from Shumer's review: "It's so capable that I sometimes don't know what to do with myself while it's running. That's a weird problem to have." GPT-5.3 Codex isn't perfect — there's no API, and cybersecurity concerns loom large. But the leap from 5.2 to 5.3 isn't just a performance upgrade. It establishes a new benchmark for coding agent autonomy. The age of AI you can start and walk away from has arrived.

- Matt Shumer - My GPT-5.3-Codex Review: Full Autonomy Has Arrived

- OpenAI - Introducing GPT-5.3-Codex

- Fortune - OpenAI's new model leaps ahead in coding capabilities—but raises unprecedented cybersecurity risks

- OpenAI Developer Community - Introducing GPT-5.3-Codex—the most powerful, interactive, and productive Codex yet

- eesel.ai - Our complete GPT 5.3 Codex review: A new era for agentic AI